Research


Scientists: "Why is there so much misunderstanding of science?" Grad student: "I want to do some public outreach." Scientists: "What a waste of time."

Brief Bio

I obtained a Master's degree in Physics ("Diplom" in German) from the University of Heidelberg. For my master's thesis I spent a year at the Universitat Pompeu Fabra (UPF) in Barcelona. I obtained a PhD from the École Polytechnique Fédérale de Lausanne (EPFL), and then moved to the Institute of Cognitive Neuroscience (ICN) at University College London. Since September 2021 I have been a lecturer in Psychology at Newcastle University.

Current Work

My research focuses on the computational mechanisms underlying spatial navigation and spatial memory. I am particularly interested in how behavior and cognition can be related to mechanistic computational models, and how current ideas about spatial memory (e.g. the role of place cells and other spatially selective cell types) can be extended to episodic memory in general. Other topics of interest include motor pattern generation, biologically inspired robotics, and large-scale brain models (in particular computational models of high-level cognition).

See below for descriptions of recent work:

- Recognition memory via grid cells

- A neural model of spatial memory and Imagery

- Reference frame transformations for head direction (to be added)

- Navigation in cluttered environments (to be added)

- Models of spinal central pattern generators (to be added)

- Large-scale integrated models (to be added)

- Models of spinal motor control (to be added)

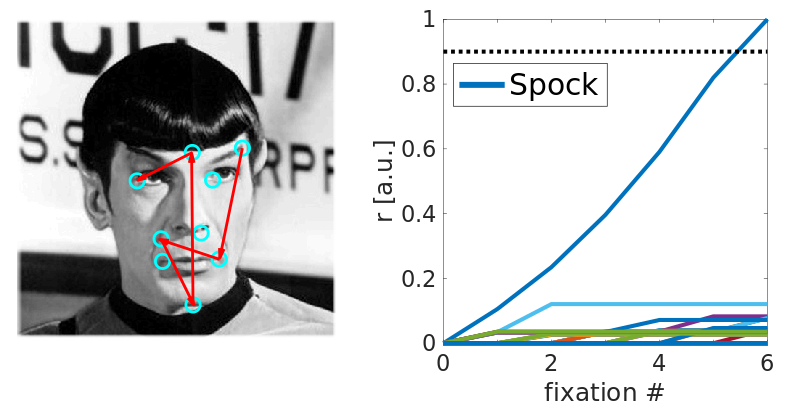

Recognition Memory via Grid Cells

A neural model of spatial memory and Imagery


Episodic and Spatial Memory

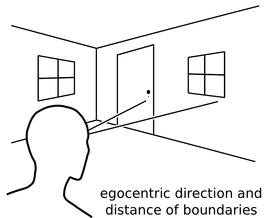

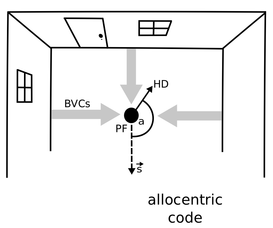

Recalling life events can be thought of as ‘re-experiencing’ them in imagery. For example, having met someone at the train station, one can later conjure up a mental image of that scene (e.g. the person at a given distance and direction from oneself, against the backdrop of the train station, a train to your left, etc.). That is, the individual elements of the scene (the person, the train ...) and their spatial relationships are part of the memory. But how is this process implemented at the level of single neurons and interacting neural ensembles?

A Computational Model of Spatial Memory and Imagery

We have built a computational model that shows how the neuronal activity across multiple brain regions underlying such an experience could be encoded and subsequently used to enable re-imagination of the event. The model provides a mechanistic account of spatial memory and imagery, including cognitive concepts such as ‘episodic future thinking’ and ‘scene-construction’. It also explains how different types of brain damage might affect these types of cognition differently, e.g. producing different aspects of amnesia.

Egocentric-Allocentric Transformations

An important aspect of the model is that when we perceive a scene, our sensory experience is encoded ‘egocentrically’ by neurons responding to objects according to their location ahead, left, or right of oneself. However, neurons in memory-related brain areas such as the hippocampus represent our location ‘allocentrically’ relative to the scene around us, as observed in the activity of place cells, head direction cells, boundary- and object-vector cells, and grid cells. The model explains how egocentric representations are transformed into allocentric representations, which are then memorized in the connections between cells in and around the hippocampus.

Importantly, this transformation can also act in reverse. That is, the activity patterns of neurons encoding elements of a scene in egocentric terms are re-instantiated by synaptic connections originating in memory-related brain areas, as opposed to having been driven by sensory inputs during perception. Thus memories can drive imagery of a scene corresponding to the original event. The same mechanism can also be used to imagine scenes from viewpoints that have not been experienced, such as those that might correspond to a future event.
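The geometry behind the egocentric-allocentric transformation can be illustrated with a small sketch. This is only a coordinate-level analogue, not the neural network model itself: the function names `ego_to_allo` and `allo_to_ego`, the (ahead, left) convention for egocentric offsets, and the choice to measure head direction counterclockwise from the allocentric x-axis are all assumptions made for this illustration.

```python
import math

def ego_to_allo(ego, agent_pos, heading):
    """Map an egocentric offset (ahead, left) to allocentric (x, y).

    heading: head direction in radians, counterclockwise from the
    allocentric x-axis (an assumed convention for this sketch).
    """
    ahead, left = ego
    c, s = math.cos(heading), math.sin(heading)
    # Rotate the self-centred offset by the head direction, then
    # translate by the agent's own allocentric position.
    return (agent_pos[0] + ahead * c - left * s,
            agent_pos[1] + ahead * s + left * c)

def allo_to_ego(allo, agent_pos, heading):
    """Inverse mapping: allocentric (x, y) back to (ahead, left)."""
    dx, dy = allo[0] - agent_pos[0], allo[1] - agent_pos[1]
    c, s = math.cos(heading), math.sin(heading)
    # Undo the translation, then rotate by minus the head direction.
    return (dx * c + dy * s, -dx * s + dy * c)

# "Perception": facing along +y at (2, 3), a person is seen
# 1 unit ahead and 1 unit to the left.
seen_from = (2.0, 3.0)
facing = math.pi / 2
stored = ego_to_allo((1.0, 1.0), seen_from, facing)

# "Recall": the stored allocentric location regenerates the
# original egocentric view.
recalled = allo_to_ego(stored, seen_from, facing)

# "Imagery": the same stored location viewed from a position and
# head direction that were never experienced.
imagined = allo_to_ego(stored, (1.0, 0.0), 0.0)

print(stored, recalled, imagined)
```

Because the stored location does not depend on where the observer stood, the same memory can be read out from any viewpoint; only the position and head direction passed to the inverse mapping change.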

Reference Frame Transformations for Head Direction


To be added soon
